Introduction to Artificial Intelligence

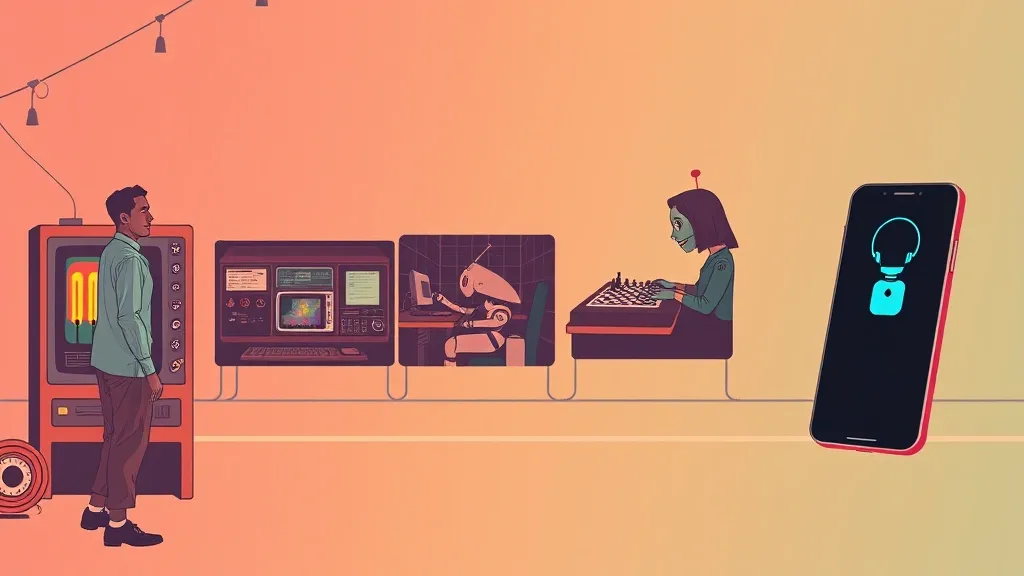

Artificial Intelligence (AI) has evolved from a conceptual framework into a transformative force that influences numerous aspects of modern life. At its core, AI refers to the simulation of human intelligence processes by machines, particularly computer systems. These processes include learning (the acquisition of information and rules for using it), reasoning (using rules to reach approximate or definite conclusions), and self-correction. As we delve into the history and development of AI, it is crucial to understand its foundational concepts and the milestones that have shaped its trajectory.

The roots of AI can be traced back to ancient history, where myths and stories depicted artificial beings endowed with intelligence or consciousness. However, the formal study of AI began in the mid-20th century. The term "artificial intelligence" was coined by John McCarthy in the 1955 proposal for the Dartmouth Conference, a 1956 summer workshop that is widely regarded as the birth of AI as a field of research. This conference brought together prominent scientists and researchers who aimed to explore the potential of machines to simulate human thinking.

Over the decades, AI has passed through phases of optimism, skepticism, and resurgence. The early years were marked by the development of algorithms and programs that could solve mathematical problems or play games like chess. However, limitations in computing power and theoretical understanding led to periods known as AI winters, when funding and interest waned.

- 1950s-1960s: The initial experiments and the creation of simple neural networks.

- 1970s-1980s: The rise of expert systems, which utilized rule-based reasoning.

- 1990s: Renewed interest with advancements in machine learning and data availability.

- 2000s-Present: The explosion of deep learning, big data, and AI applications across industries.

Today, AI encompasses a wide range of technologies, including machine learning, natural language processing, computer vision, and robotics. Its applications are vast, affecting industries such as healthcare, finance, transportation, and entertainment. As we explore the question of when artificial intelligence started, we will uncover the pivotal moments and breakthroughs that have paved the way for the AI-driven world we inhabit today.

Early Developments in AI (1950s-1970s)

The inception of artificial intelligence (AI) can be traced back to the mid-20th century, a period marked by significant theoretical advancements and pioneering research. The 1950s and 1960s laid the groundwork for the field, establishing foundational concepts that would shape future AI developments.

The Dartmouth Conference of 1956 is widely regarded as the birth of AI as a formal discipline. Organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, this conference gathered the leading minds of the time to discuss the potential of machines to simulate human intelligence. The term "artificial intelligence," which McCarthy had introduced in the 1955 proposal for the event, signaled a shift in focus towards creating machines that could think and learn.

During the mid-to-late 1950s, several key programs emerged that demonstrated the capabilities of early AI. One of the most notable was the Logic Theorist, developed by Allen Newell, Herbert A. Simon, and Cliff Shaw in 1955-1956. This program was designed to prove theorems in symbolic logic and is often considered the first AI program. It was followed in 1957 by the General Problem Solver (GPS), from the same group, which aimed to solve a wide range of problems using a heuristic approach.

The 1960s saw the development of more sophisticated AI systems. In 1966, Joseph Weizenbaum created ELIZA, an early natural language processing program that mimicked human conversation. ELIZA relied on simple pattern matching rather than genuine understanding, yet its ability to engage users in dialogue highlighted the potential for machines to process and respond to human language, a concept that continues to be a focal point in AI research today.
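To make the idea of conversational pattern matching concrete, here is a minimal Python sketch in the spirit of ELIZA. It is not Weizenbaum's original script: the rule table, the `respond` function, and the default reply are all hypothetical, chosen only to illustrate how a matched fragment of the user's input can be echoed back inside a canned template.

```python
import re

# Hypothetical rule table: (pattern, response template). These rules are
# illustrative assumptions, not rules from the original ELIZA script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE),
     "Tell me more about your {0}."),
]

DEFAULT_REPLY = "Please go on."


def respond(utterance: str) -> str:
    """Return the reply for the first rule whose pattern matches the input."""
    # Drop surrounding whitespace and trailing punctuation before matching.
    cleaned = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            # Reuse the captured fragment of the user's own words.
            return template.format(*match.groups())
    # No rule matched: fall back to a neutral prompt.
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ["I need a holiday.", "I am tired", "Hello"]:
        print(f"> {line}\n{respond(line)}")
```

The program has no model of meaning. It only rearranges text that matched a pattern, which is why such dialogue can seem attentive in short exchanges and break down quickly in longer ones.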

Moreover, the introduction of the perceptron by Frank Rosenblatt in 1958 was an early milestone in neural network research, building on earlier mathematical models of the neuron. Interest in neural networks waned in the 1970s due to limitations in computational power and theoretical understanding, including the critique published by Marvin Minsky and Seymour Papert in 1969, but the groundwork had been laid for later breakthroughs in machine learning.
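The perceptron mentioned above can be sketched in a few lines of Python. This is a simplified illustration of the classic perceptron learning rule, not a reconstruction of Rosenblatt's original hardware or code. The function names, the learning rate, the epoch limit, and the choice of the logical AND function as training data are assumptions made for the example.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Fit weights with the perceptron rule: w += lr * (target - output) * x."""
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                # Nudge the decision boundary toward the misclassified point.
                weights = [w + learning_rate * error * xi
                           for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:
            # Every sample is classified correctly; stop early.
            break
    return weights, bias


# Illustrative training data (an assumption for this sketch): logical AND.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_GATE)

if __name__ == "__main__":
    for x, target in AND_GATE:
        print(x, "->", predict(weights, bias, x), "(expected", target, ")")
```

A single unit of this kind can only separate classes with a straight line (or a hyperplane in higher dimensions). It can learn AND but not XOR, which is one of the theoretical limitations that dampened enthusiasm for neural networks in this period.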

In summary, the early developments in AI from the 1950s through the 1970s were characterized by groundbreaking research and the establishment of fundamental concepts that continue to influence the field. The vision set forth by early pioneers paved the way for the rapid advancements in AI technology that would follow in subsequent decades.

The AI Winter and Resurgence (1980s-2000s)

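The period from the 1980s to the early 2000s included a notable decline in artificial intelligence research and funding, commonly referred to as an AI winter. The term describes a phase in which expectations were not met, leading to skepticism about the feasibility of AI technologies. Historians usually identify two such downturns: one in the mid-1970s, and a second that began in the late 1980s after the commercial boom in expert systems collapsed.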

Several factors contributed to this downturn:

- Overhyped Expectations: The ambitious predictions made by AI pioneers in the 1960s and 1970s created unrealistic expectations. When these predictions did not materialize, funding agencies and investors grew disillusioned.

- Technical Limitations: The computational power available during this period was insufficient to handle complex AI tasks. Limitations in algorithms and data availability hampered progress in machine learning and natural language processing.

- Increased Competition: As the field of computer science evolved, other disciplines such as robotics, cognitive science, and neuroscience began to gain attention, diverting resources away from AI research.

From the late 1980s into the 1990s, many AI projects were discontinued, and funding was significantly reduced. However, this period also laid the groundwork for future advancements. Researchers continued to work on foundational concepts, which would later prove essential for the resurgence of AI in the 21st century.

By the late 1990s, the landscape began to shift. The advent of the internet and the exponential growth of computational power opened new avenues for AI research. Key developments during this resurgence included:

- Data Availability: The explosion of data generated by online interactions provided the necessary fuel for machine learning algorithms to improve.

- Advancements in Algorithms: Breakthroughs in neural networks and other machine learning techniques, such as support vector machines and ensemble methods, led to more effective AI models.

- Increased Investment: Both private and public sectors recognized the potential of AI, leading to a renewed influx of funding and interest in the field.

By the turn of the millennium, AI research was experiencing a renaissance, setting the stage for the incredible advancements that would define the 21st century. This resurgence not only revitalized interest in AI but also marked the beginning of a new era where artificial intelligence would become integral to various sectors, including healthcare, finance, and transportation.

Modern AI and Its Future

In recent years, the landscape of artificial intelligence (AI) has evolved dramatically, transitioning from theoretical concepts to practical applications that permeate diverse sectors. Modern AI encompasses a range of technologies, including machine learning, natural language processing, and computer vision, which are driving innovations across industries such as healthcare, finance, and transportation.

One of the defining characteristics of contemporary AI is its ability to learn from vast amounts of data. This capability is primarily facilitated by deep learning algorithms, which use layered artificial neural networks loosely inspired by the structure of the human brain. As these algorithms are trained on more data, their performance generally improves, leading to increasingly sophisticated applications. For instance, AI systems can now assist in diagnosing medical conditions, forecast financial trends, and drive vehicles with varying degrees of autonomy.

The future of AI holds immense potential, but it also raises important ethical and societal questions. As AI technologies continue to advance, several key trends are likely to shape their trajectory:

- Increased Automation: As AI systems become more capable, the potential for automation across various job sectors will expand. This could lead to increased efficiency and productivity but may also result in job displacement for certain roles.

- Enhanced Personalization: AI is set to revolutionize the customer experience by providing highly personalized services, from tailored marketing strategies to customized healthcare plans based on individual needs and preferences.

- Ethical AI Development: The growing awareness of the ethical implications of AI will drive the development of frameworks and regulations to ensure responsible AI usage. This includes addressing biases in AI algorithms and ensuring transparency in decision-making processes.

- Interdisciplinary Collaboration: Future advancements in AI will likely involve collaboration between various fields, including computer science, psychology, and neuroscience, to create more robust and human-like AI systems.

In conclusion, the modern era of AI presents both exciting opportunities and significant challenges. As we move forward, it is crucial to strike a balance between harnessing the capabilities of AI technologies and addressing the ethical considerations that accompany their integration into society. The future of AI is not just about technological advancement; it is also about fostering a responsible coexistence between humans and intelligent machines.